Opinion pieces and reporting about the decline in male readership are afflicted at the outset by a basic epistemological hang-up: how would we even assess this? Neither survey data nor shopping analytics seem scientifically sound, given that they will be skewed by some form of selection bias. In the case of surveys, both the distribution of and response to surveys are potential fault points. As for sales data, it seems obvious upon reflection that there is no necessary correlation between buying books and reading them in a world where a) some purchases are purely performative and b) one needn’t purchase new books to be a serious or thoughtful reader. I suspect that the every-few-months greenlighting of a new article about the death of reading among men (or the youth) is based largely on what contemporary parlance nebulously calls vibes.

A cursory glance at the world’s most famous men does indeed reveal a lack of literacy. It’s hard to imagine any of the men in the broader manosphere settling down with a chunk of literary heft, nor are our testosteronal titans of industry and penis-swinging politicians great exemplars of the bibliomania which we wistfully imagine previous, more enlightened lords of the world to have embodied. On a more proletarian scale, you may find yourself struggling to think of many ‘regular guys’ you know among the dudes, chads, chuds, bros, and douches encountered in daily life who burn with desire for ocular application to the printed word. Indeed, of the men whom I know, I can think of only one who has any thought for literature at all.

But this is all anecdotal impressionism, not the kind of foundation on which to construct a superstructure of moral panic. Perhaps, after all, what is really happening is that reading and literature have ceased to be a locus of virility in the way that they were in the pre-digital age. Could one imagine Samuel Johnson’s declaration about his reading being taken at face value if made today?

“What he read during these two years, he told me, was not works of mere amusement, ‘not voyages and travels, but all literature, Sir, all ancient writers, all manly: though but little Greek, only some of Anacreon and Hesiod; but in this irregular manner (added he) I had looked into a great many books, which were not commonly known at the Universities, where they seldom read any books but what are put into their hands by their tutors; so that when I came to Oxford, Dr. Adams, now master of Pembroke College, told me I was the best qualified for the University that he had ever known come there.’”

I myself was taken aback by Martin Amis’ note in Experience that Iris Murdoch was his favorite ‘woman writer.’ What could this imply other than that, even in the mind of a self-declared feminist writing at the end of the 1990s, literature still seemed like a primarily male preserve?

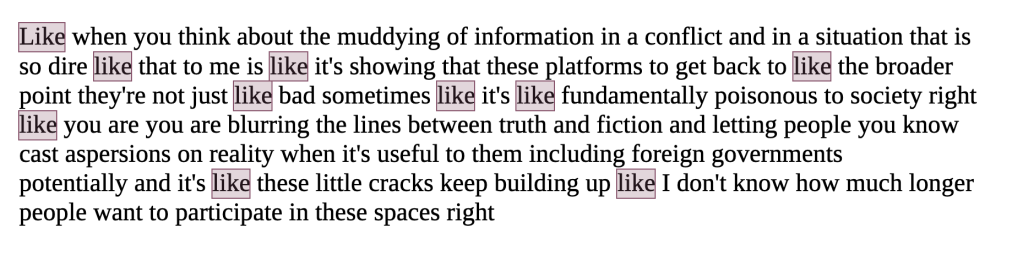

Tyler Cowen is a man. Moreover, he is a man who reads a lot, and I have seen him discuss literature with sensibility and a certain depth of appreciation. Nevertheless, he has also claimed that he finds Twitter more ‘information dense’ than books. This seems like an odd argument to make in light of how much disinformation and frivolity have always characterized Twitter, even well before the days of Musk. (There is a tendency now to nostalgically sentimentalize an earlier incarnation of the platform as a great place for journalists and academics to engage in open information sharing, but I seem to recall three distinct phases of Twitter: 1) mid-aughts dismissal of the platform as geared toward triviality; 2) mid-teens mass adoption characterized by childish arguments, blocking, pile-ons, and retreats from the platform for the recomposure of mental health; 3) degradation into mostly porn, misogyny, and Musk-favored talking points.) Perhaps Cowen’s point is that the limitations of attention online and the old restrictions imposed by character limits meant that there was less fluff tolerance than in other media, but given that these nuggets of information density were surrounded by irrelevant mental effluvia, it’s not clear that there would have been any more ‘density’ here than in any of the baggiest monsters.

But why am I even addressing this point? No book, excepting works of reference, is meant simply to carry information. In the case of books on science, economics, philosophy, history, sociology, etc., the information is presented as a part of a larger argument which you are meant to follow as you progress through the volume. To return to Martin Amis and his novel The Information, aptly titled for our purposes: when asked what he was trying to say with the novel, he once said that it took him several hundred pages to say it – that is, the novel is what he was ‘trying to say.’

In the case of literature, though – whether it be poetry, novels, or even narrative non-fiction – the point is not to receive information, but to have an experience. You may learn something when reading a novel, or you may not. Learning is entirely irrelevant to the process. No one assesses music on the basis of sonic density, on the amount of sound that can be crammed into a given amount of time. No one looks at a painting for chromatic density. It is the mark of the most debased philistinism to suppose that the point of reading is simply to extract encoded information and download it into your own neural server. I have spent more of my waking hours as an adult reading than on any other individual activity, and yet I remember only a trivial fraction of the ‘information’ contained in those books, even if I vividly recall the experience that I had while reading them. But in truth, I have also forgotten most of the actual events which I have experienced in my own life. If I thought of reading as an activity structured around the acquisition of information, I too might come to the conclusion that it was, if not wasted time, then at least a poor use of it.

So, are men reading less than they used to? Perhaps. I am not entirely unpersuaded by the vibes-based argument, given that it does seem that men talk about sports, videogames, TV, and working out far more than they do about books. But then again, so does all of society. There was a brief period spanning a good chunk of the 19th and 20th centuries during which literacy was increasing and literature had a certain operative force within the world. This period was also one in which the literary firmament was dominated by a lot of titanic male egos who seemed to have the same ability to distort parts of reality that tech bros have today – maybe men just flocked to books because it seemed like a manly enough thing to do. But as our dominant paradigm for exploring, commenting upon, and shaping the world shifted from the literary to the technical, maybe the boys’ club just shifted its venue.

This is all by way of saying: who really cares whether men are reading or not? They tyrannized over the literary kingdom for quite a long time. Moreover, it’s hard to recall a time when hand-wringing over some demographic/intellectual trend did much to alter the trend in question. Whether driven by data or purely by vibes, the panic over male readership misses a salient point: reading is a quiet and fundamentally solitary activity, something which, wherever one does it, is still taking place entirely within one’s head. In a world driven by sharing evidence of our identity through immediately intelligible outward display, such a lonely pursuit within one’s private phenomenology cannot compete for attentional space. You can watch a livestream of people eating, working out, playing videogames; but one would be hard-pressed to imagine tuning in to someone reading quietly to themselves, and indeed the very act of televising it would cast suspicion on one’s commitment to the activity of reading itself. Perhaps we’ll regret the day when mass male reading makes a return and it becomes just another obnoxious pissing contest.